I eventually had to throw away my feverish rant about Cookie Clicker, as I felt it contained spoilers for both the video game industry and life itself, so instead here's what I've been up to Unity-wise these past weeks.

With a few desultory mouse-clicks, I had ported my web-targeted 2D platformer to Android. Well, the appearance of it, anyhow. It was still only set up to take keyboard input. No worries, though: since the first step was such a breeze, implementing a simple set of onscreen controls should be a similar walk in the park, yes?

Well, the first problem, when the touch input examples I copied didn't work, was that I was staring at a phone that wasn't responding to input, with no access to a debug console. That had to change first thing.

I spent a good many hours trying to rig up live debugging with various IDEs, SDK versions, phone drivers, and even a third-party Windows driver updater for Android phones, which informed me that my phone's OS was "not compatible with Windows 8 x64." Since Windows 8 x64 is what they had at Fry's, even after I asked the guy to look in the back for any Windows 7 machines he might have overlooked, that's what we're rolling with.

After a while, Eclipse deigned to recognize my HTC Incredible, which I guess isn't all that incredible anymore, and I started hacking away at it. How could I set up a control scheme that would slot easily into the existing movement controls of the game I was already making? A quick check showed I was moving the player with Input.GetAxis("Horizontal") multiplied by some arbitrary speed value, so I was looking to replicate, with these two touchable arrows, a continuous value between -1 and 1, which is what GetAxis("Horizontal") gives you.

So I pictured a finely grained number line clamped at -1 and 1, and set about adding or subtracting tiny numbers to and from it, with lots of little special cases like snapping it to zero when you switch directions, and letting the value drift back toward zero when not being touched. I eventually had to start learning about touch phases, but that took a while to sink in.
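The number-line idea can be sketched in plain C# (no UnityEngine, so it runs outside the editor). This is a hypothetical `StepAxis` class, not code from the project, and it swaps in one technique on purpose: it counts whole steps as an integer and divides on the way out, instead of adding 0.1f to a float, so repeated adds and subtracts can't drift away from clean values.

```csharp
using System;

// Hypothetical helper (not from the original project): a -1..1 axis stored
// as a whole number of steps so repeated increments never accumulate float error.
public class StepAxis
{
    private readonly int maxSteps; // steps from 0 to full deflection
    private int steps;             // current position, -maxSteps..maxSteps

    public StepAxis(int maxSteps)
    {
        this.maxSteps = maxSteps;
    }

    // Same range GetAxis("Horizontal") reports: -1 .. 1
    public float Value
    {
        get { return (float)steps / maxSteps; }
    }

    // Advance one frame given which arrows are currently held.
    public void Step(bool leftHeld, bool rightHeld)
    {
        if (leftHeld)
        {
            if (steps > 0) steps = 0; // snap to zero when switching direction
            steps--;
        }
        if (rightHeld)
        {
            if (steps < 0) steps = 0;
            steps++;
        }
        if (!leftHeld && !rightHeld)
        {
            // nothing touched: drift back toward zero by one step per frame
            steps -= Math.Sign(steps);
        }
        // clamp at the ends of the number line
        steps = Math.Max(-maxSteps, Math.Min(maxSteps, steps));
    }
}
```

Inside Unity you would call Step with the two arrow states once per Update and multiply Value by your speed, exactly as you would with GetAxis output. With maxSteps set to 10, each frame moves the value by 0.1.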

My first pass was a pile of very long functions full of if-trees reaching for global variables that weren't mentioned anywhere else in the function: a god-awful mess, pure flailing.

The second pass attempted to extend the Rect class as a custom UI object (lol, why? I dunno). This led me to learn what sealed means (Unity's Rect is a struct, and you can't inherit from a struct), and gave me a slightly better understanding of "the Unity Way", which involves hanging scripts and components on GameObjects like baubles on a Xmas tree, so I had been going about the whole thing backwards. The problem was that the Rects in which I was drawing the textures only existed as concepts, created by a few GUI.Box calls inside OnGUI, with nothing you could click on in the Inspector. The Xmas tree only gets put up at runtime, so anything you want to hang on it also has to be defined and described in script.

Eventually I had painted myself into a corner. I'm finding as this goes on that one of the important skills to master (and boy, can this ever be generalized) is recognizing when you've reached the end of a first draft, and then, rather than setting that work aside or abandoning it, taping it up to the wall or throwing it onto another monitor and referring to it while you build the second draft, keeping its strengths while paring out its flaws. Iteration.

I started over and thought in bigger-picture terms about what the thing was trying to do. I remembered one of the lessons from a video I watched: when you're trying to perform a complicated operation, if you spend the right amount of time and effort figuring out what your individual functions should each be and do, the actual guts of any of them turn out to be not so complicated. Organization.

Unfortunately, the third attempt quickly accumulated a bunch of variables and calculations that rightly belonged elsewhere, so eventually it had to be scrapped as well. I approached the fourth draft with a flinty-eyed stare. There would be four public bools, readable but not writeable by any and every class that cared to use them. Each bool would correspond to one UI button and would indicate whether right now, at this moment, that button is receiving something we want to interpret as a touch. All this class does is sort through all the touches by phase and turn those bools on and off as needed. Here it is:

```csharp
using UnityEngine;
using System.Collections;

public class touchControls : MonoBehaviour {

    // assign 128px textures in the Inspector
    public Texture leftArrowTex;
    public Texture rightArrowTex;
    public Texture greenButtonTex;
    public Texture yellowButtonTex;

    // consumable button state values: other classes can read but not write them
    public bool leftFlag   { get { return left; } }
    public bool rightFlag  { get { return right; } }
    public bool greenFlag  { get { return green; } }
    public bool yellowFlag { get { return yellow; } }

    private bool left;
    private bool right;
    private bool green;
    private bool yellow;

    private Rect leftArrow;
    private Rect rightArrow;
    private Rect greenButton;
    private Rect yellowButton;

    private float sw; // screen width
    private float sh; // screen height
    private float bu; // boxUnit, default box measurement
    private float au; // arrowUnit, default arrow measurement

    private string debugStr;

    void Start () {
        sw = Screen.width;
        sh = Screen.height;
        bu = 256;
        au = 128;

        // GUI rects are laid out from the top-left corner of the screen
        leftArrow    = new Rect(0, sh - au, au, au);
        rightArrow   = new Rect(au, sh - au, au, au);
        greenButton  = new Rect(sw - (au * 2), sh - au, au, au);
        yellowButton = new Rect(sw - au, sh - au, au, au);

        UpdateDebugString();
    }

    void Update () {
        HandleArrows();
        HandleButtons();
        UpdateDebugString();
    }

    void OnGUI () {
        GUI.Box(new Rect(sw/2 - (bu/2), sh/2 - (bu/2), bu, bu), debugStr);
        GUI.Box(leftArrow, leftArrowTex);
        GUI.Box(rightArrow, rightArrowTex);
        GUI.Box(greenButton, greenButtonTex);
        GUI.Box(yellowButton, yellowButtonTex);
    }

    // Arrows are on while any touch inside them is in any phase except Ended/Canceled.
    void HandleArrows()
    {
        // start each frame off, so a second finger elsewhere can't cancel a held arrow
        left = false;
        right = false;

        foreach (Touch touch in Input.touches)
        {
            if (touch.phase == TouchPhase.Ended || touch.phase == TouchPhase.Canceled)
                continue;

            Vector2 screenPos = ToGuiSpace(touch.position);
            if (leftArrow.Contains(screenPos))  left = true;
            if (rightArrow.Contains(screenPos)) right = true;
        }
    }

    // Buttons are on only during the frame in which a touch begins inside them.
    void HandleButtons()
    {
        green = false;
        yellow = false;

        foreach (Touch touch in Input.touches)
        {
            if (touch.phase != TouchPhase.Began)
                continue;

            Vector2 screenPos = ToGuiSpace(touch.position);
            if (greenButton.Contains(screenPos))  green = true;
            if (yellowButton.Contains(screenPos)) yellow = true;
        }
    }

    // touch input gives us bottom-left-origin pixels; GUI rects need top-left origin
    Vector2 ToGuiSpace(Vector2 touchPos)
    {
        return new Vector2(touchPos.x, sh - touchPos.y);
    }

    void UpdateDebugString()
    {
        debugStr = "LEFT =" + leftFlag + "\nRIGHT =" + rightFlag
            + "\nGREEN =" + greenFlag + "\nYELLOW =" + yellowFlag;
    }
}
```

The concept behind the two sort-of-mirrored main functions is that we want to record touches on the arrows in any phase except TouchPhase.Ended (or TouchPhase.Canceled), and touches on the buttons only in TouchPhase.Began. This gives us the desired behavior: you can press and hold the arrows for continuous input, but the buttons must be tapped.
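The hold-versus-tap rule can be isolated from Unity and tested on its own. In this sketch the `Phase` enum is a hypothetical stand-in that mirrors the members of Unity's TouchPhase, and `TouchSample` and `TouchRules` are illustrative names, not part of any Unity API. The design choice worth noting is the "any" aggregation: a control counts as on if at least one touch this frame qualifies, so a second finger somewhere else on the screen can't switch it off.

```csharp
using System.Collections.Generic;

// Hypothetical stand-in mirroring UnityEngine.TouchPhase so this compiles outside Unity.
public enum Phase { Began, Moved, Stationary, Ended, Canceled }

// Hypothetical minimal touch record: which control it landed on, and its phase.
public struct TouchSample
{
    public string Control;
    public Phase Phase;

    public TouchSample(string control, Phase phase)
    {
        Control = control;
        Phase = phase;
    }
}

public static class TouchRules
{
    // Arrows: held for as long as the finger is down.
    public static bool IsHeld(Phase phase)
    {
        return phase != Phase.Ended && phase != Phase.Canceled;
    }

    // Buttons: only the frame the finger first lands counts.
    public static bool IsTap(Phase phase)
    {
        return phase == Phase.Began;
    }

    // True if ANY touch on this control qualifies as held this frame.
    public static bool AnyHeld(IEnumerable<TouchSample> touches, string control)
    {
        foreach (TouchSample t in touches)
            if (t.Control == control && IsHeld(t.Phase)) return true;
        return false;
    }

    // True if ANY touch on this control began this frame.
    public static bool AnyTap(IEnumerable<TouchSample> touches, string control)
    {
        foreach (TouchSample t in touches)
            if (t.Control == control && IsTap(t.Phase)) return true;
        return false;
    }
}
```

For example, one frame holding the left arrow while a second finger lands on the green button should report both as on; on the next frame, with both fingers still down, only the arrow stays on.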

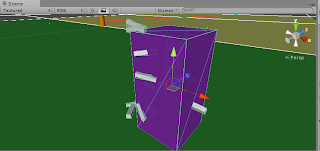

Here's the script on the cube in the middle of the screen that moves and shoots smaller cubes, as an example of how the flags are used. In both cases, of course, you'd have to set up your assets in the Inspector.

```csharp
using UnityEngine;
using System.Collections;

public class moveCube : MonoBehaviour {

    private touchControls hud;
    private Vector3 cubeUpdate;
    private int vroom;          // distance moved per frame at full deflection
    public GameObject zapFab;   // projectile prefab, assign in the Inspector
    private GameObject zapClone;
    private float nextCubex;
    private float recoilTimer;
    private float recoilCap;
    private float haxis;        // horizontal axis bucket, -1..1
    private float haxunit;      // unit for altering the bucket
    private bool justShotFlag;

    // Use this for initialization
    void Start () {
        hud = GameObject.Find("Main Camera").GetComponent<touchControls>();
        vroom = 50;
        //justShotFlag = false;
        haxunit = 0.1f;
    }

    // Update is called once per frame
    void Update () {
        // round off float drift from repeatedly adding/subtracting 0.1f
        haxis = Round(haxis);

        if (hud.greenFlag) {
            OnGreen();
        }

        if (hud.leftFlag) {
            if (haxis > 0) { haxis = 0; } // snap to zero when switching direction
            haxis -= haxunit;
        }

        if (hud.rightFlag) {
            if (haxis < 0) { haxis = 0; }
            haxis += haxunit;
        }

        // neither arrow held: drift back toward zero
        if (!hud.rightFlag && !hud.leftFlag) {
            if (haxis > 0) { haxis -= haxunit; }
            if (haxis < 0) { haxis += haxunit; }
        }

        // clamp haxis at ends
        haxis = Mathf.Clamp(haxis, -1.0f, 1.0f);

        // clamp haxis to zero at very close values to avoid stutters
        if (Mathf.Abs(haxis) < 0.001f) { haxis = 0; }

        // move cube (a fixed amount per frame, so speed depends on frame rate)
        nextCubex = Mathf.Clamp(transform.position.x + haxis * vroom, -486.0f, 486.0f);
        cubeUpdate = new Vector3(nextCubex, transform.position.y, transform.position.z);
        transform.position = cubeUpdate;
    }

    void OnGreen()
    {
        zapClone = Instantiate(zapFab, transform.position, transform.rotation) as GameObject;
    }

    // helpers

    // round to three decimal places
    float Round(float num)
    {
        return Mathf.Round(num * 1000) / 1000;
    }
}
```

OK, now we have a crude sort of Zaxxon clone, with no enemies, but we've demonstrated the touch input setup, so we're ready to integrate this with our platformer, right? We can set this demo aside, correct? Damn, I forgot how fun Zaxxon is... even without any bad guys it's kind of awesome... I mean, how long would it take, really, to plug in a few columns that explode when you shoot them, maybe an enemy or two... in fact, I think I may have some innovative ideas that could advance the whole Zaxxon-like genre... I could always jump back to the platformer any time... This is like that part in the novel-writing process where you start getting all these amazing ideas for other novels. Discipline, they say, is its own reward. We'll see.